Plenary Abstracts

| Name | Affiliation | Tentative Title |
| --- | --- | --- |
| Lorenz Biegler | Carnegie Mellon University (US) | Nonlinear Optimization for Model Predictive Control |
| Francesco Borrelli | University of California, Berkeley (US) | Learning MPC in Autonomous Systems |
| Stefano Di Cairano | Mitsubishi Electric Research (US, Industry) | Contract-based design of control architectures by model predictive control |
| Ilya Kolmanovsky | University of Michigan (US) | Drift Counteraction and Control of Underactuated Systems: What MPC has to offer? |
| Jan Maciejowski | University of Cambridge (UK) | Uses and abuses of Nonlinear MPC |
| Giancarlo Ferrari Trecate | École polytechnique fédérale de Lausanne (Switzerland) | Scalable fault-tolerant control for cyberphysical systems |
| Stephen Wright | University of Wisconsin-Madison (US) | Optimization and MPC: Some Recent Developments |

Lorenz T. Biegler

Nonlinear Optimization for Model Predictive Control

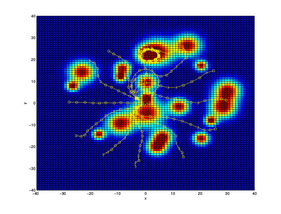

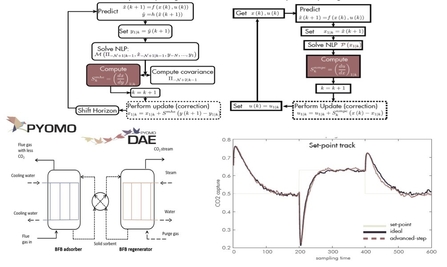

Concepts, algorithms and modeling platforms for realizing nonlinear model predictive control (NMPC) with nonlinear programming (NLP) are described. These allow the incorporation of predictive dynamic models that lead to high-performance control, estimation and optimal operation. This talk reviews NLP formulations that guarantee Lyapunov stability properties and address horizon lengths, terminal regions and terminal costs. Moreover, fast algorithms for NMPC are briefly described and extended to sensitivity-based solutions, as well as to parallel decomposition strategies for large dynamic systems. Finally, Pyomo, a Python-based modeling platform, is tailored to dynamic optimization strategies for state estimation, control and simulation, bringing all of these topics together for online applications.

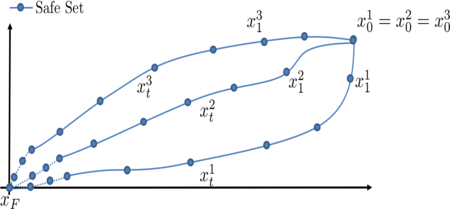

Francesco Borrelli

Learning MPC in Autonomous Systems

Forecasts play an increasingly important role in the next generation of autonomous and semi-autonomous systems. Applications include transportation, energy, manufacturing and healthcare systems.

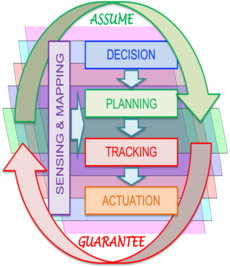

Stefano Di Cairano

Contract-based design of control architectures by model predictive control

The autonomous operation of highly complex devices, such as automotive vehicles, spacecraft, and precision manufacturing machines, usually requires an equally complex control software architecture, with several interacting control functions structured in layers and hierarchies. Integrating these control functions is a daunting task: it is hard, time-consuming and expensive, and it is often the bottleneck in introducing new control algorithms into mass-marketed products. In this talk we discuss how model predictive control (MPC) can simplify, if not entirely solve, the design of such complex control architectures. In particular, we discuss how MPC can be used to design and enforce assume-guarantee contracts between control functions, allowing their seamless integration with provable guarantees for the resulting architecture. Because the enforceable contracts are general, they can be used to integrate control functions that use different abstractions, update frequencies, and computational frameworks, as in the architecture of autonomous ground or space vehicles, or to integrate control functions with other algorithmic frameworks, such as learning. The potential impact on several industrial domains is demonstrated with applications in automotive, aerospace and factory automation.

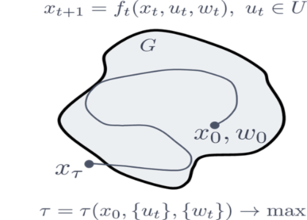

Ilya Kolmanovsky

Drift Counteraction and Control of Underactuated Systems: What MPC has to offer?

Motivated by practical applications, the talk will highlight MPC formulations suitable for counteracting system drift and the effects of large measured disturbances or set-point changes, and for overcoming the limitations of under-actuation. For drift counteraction, where the objective is to maximize the time or yield until the system trajectory exits a prescribed set defined by system safety constraints, operating limits and/or efficiency requirements, MPC based on mixed-integer or conventional linear programming can yield effective solutions for higher-order systems than are possible with dynamic programming and value-iteration-based methods. Such solutions can have broad applicability, including fuel-optimal Geostationary Orbit (GEO) station keeping, spacecraft Low Earth Orbit (LEO) maintenance, under-actuated spacecraft attitude control, hybrid electric propulsion energy management, glider flight management, and the development of driving policies for adaptive cruise control and autonomous driving.

Jan Maciejowski

Uses and abuses of Nonlinear MPC

In reality (almost) all MPC is Nonlinear, just as (almost) all Kalman filters are Extended. But let us concede the terminological convention that the "N" in "NMPC" refers to the control algorithm, not to the plant being controlled. MPC belongs to the class of Receding Horizon Controllers (RHC): (1) plan over a finite horizon, (2) decide an action over that horizon and start to execute it, (3) monitor the effects of the action and re-plan over the same horizon length as before, (4) repeat indefinitely. "Classical" MPC is the simplest member of the RHC class, and its great success is due to its extraordinary combination of simplicity and effectiveness. As our academic career imperatives drive us to invent more elaborate versions of MPC, we increase its effectiveness slowly but lose simplicity quickly. As we do so, it is worth asking "Is this still MPC?" Is NMPC still MPC? Do we really need the "N"? The "N" traditionally means "Nonlinear", but more correctly it should be "Non-convex". NMPC may be needed because of non-convexity in the cost function, nonlinearity in the model, or non-convexity of the constraints. My contention is that one very rarely requires a non-convex cost function, and relatively rarely a nonlinear model. But there are many problems in which non-convex constraints seem to be unavoidable. I will illustrate my talk with a few real-life problems, including one in which the dimension of the state space varies and there are no equilibria; NMPC (or NRHC?) can be made to work well in this case, but even defining stability, let alone proving it, takes us out of our comfort zone.
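
The four-step receding horizon loop listed above can be sketched in a few lines. This is a minimal, illustrative sketch and not taken from the talk: it assumes a hypothetical scalar linear model, a small discrete set of admissible inputs, and exhaustive search as the planner, standing in for the QP or NLP solver a real MPC implementation would use. The "real" plant (`true_plant`, hypothetical) is deliberately given a slightly different gain than the planning model, so the monitor-and-re-plan step matters.

```python
import itertools

# Hypothetical scalar model used for planning: x[k+1] = A*x[k] + B*u[k].
A, B = 1.2, 1.0
U_SET = (-1.0, -0.5, 0.0, 0.5, 1.0)  # admissible inputs (an input constraint)
HORIZON = 3                           # finite planning horizon N
R = 0.1                               # weight on control effort


def predicted_cost(x0, inputs):
    """Quadratic cost of one candidate input sequence, predicted with the model."""
    x, cost = x0, 0.0
    for u in inputs:
        x = A * x + B * u
        cost += x * x + R * u * u
    return cost


def plan(x0):
    """(1) Plan over a finite horizon: exhaustive search over all U_SET^N sequences."""
    return min(itertools.product(U_SET, repeat=HORIZON),
               key=lambda seq: predicted_cost(x0, seq))


def true_plant(x, u):
    """Hypothetical 'real' plant whose gain differs slightly from the model."""
    return 1.25 * x + 1.0 * u


def receding_horizon(x0, steps, plant=true_plant):
    """(2)-(4): apply only the first planned move, measure, re-plan, repeat."""
    x, trajectory = x0, [x0]
    for _ in range(steps):
        u0 = plan(x)[0]       # (2) decide an action and start executing it
        x = plant(x, u0)      # (3) monitor its effect on the actual plant
        trajectory.append(x)  # next iteration re-plans with the same horizon
    return trajectory


if __name__ == "__main__":
    print([round(x, 3) for x in receding_horizon(2.0, steps=10)])
```

Only the first move of each plan is applied; the remainder is discarded and recomputed from the measured state, which is what distinguishes receding horizon control from executing a single open-loop plan computed once.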

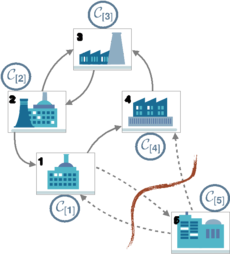

Giancarlo Ferrari Trecate

Scalable fault-tolerant control for cyberphysical systems

Technological frameworks such as the Internet of Things, Industry 4.0, and the Industrial Internet are promoting the development of cyberphysical systems with a flexible structure, where subsystems enter, leave, and are replaced over time. In the absence of a reference model, flexibility must be mirrored in the control and monitoring layers, meaning that local regulators and fault detectors have to be designed in a scalable fashion using information from a limited number of subsystems. In this talk we will present plug-and-play architectures integrating distributed MPC strategies with fault detection algorithms. The goal is to achieve fault tolerance through the automatic disconnection of malfunctioning subsystems and the reconfiguration of local controllers. Approaches for performing these operations safely and without compromising the stability of the overall system will be discussed. The final part of the talk will be devoted to research perspectives in the field.

Stephen Wright

Optimization and MPC: Some Recent Developments

MPC has long been a source of challenges to optimization methodology, because of the need to tackle difficult, structured optimization problems in limited wall-clock time and in a way that meets the requirements of the controller (for example, stability). Recently, the interaction between optimization and MPC has taken on a new dimension, with new proposals for the use of machine learning (and thus optimization) in control strategies in which the models are learnt rather than specified. We review several of these developments from an optimization perspective, and speculate about how recent advances in nonconvex optimization and in MPC might influence each other in the future.